I'm Not a Robot

How reCAPTCHA Works

Are you a robot? You’ve probably answered this question a thousand times, for example when filling out a form. Sometimes it comes with an annoying challenge, and it feels pretty stupid when you get the answer wrong. How do these reCAPTCHA tests work, and are they really just there to check that you’re not a robot?

How to Distinguish a Human from a Bot

First, let’s look at the definition. CAPTCHA stands for Completely Automated Public Turing test to tell Computers and Humans Apart. A “Turing test” is an experiment proposed in 1950 by Alan Turing to test whether a machine exhibits human-like intelligence. The machine passes if a human judge cannot tell whether they are talking to a human or a computer. A CAPTCHA is sometimes called a reverse Turing test: here a computer is the judge, and it is the human who has to prove they are not a bot.

CAPTCHA was invented by Luis von Ahn and his colleagues at Carnegie Mellon University. In 2000, he attended a lecture at Yahoo!, where he learned that Yahoo needed a way to distinguish humans from bots because many spam bots were trying to register e-mail accounts.

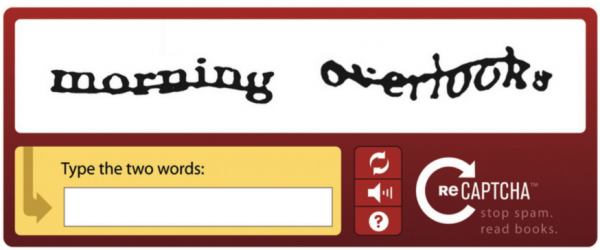

Von Ahn accepted this challenge. In his research, he discovered that people are pretty good at reading things on different surfaces. For example, we read road signs and handwritten text without any problems. Therefore, the first CAPTCHA test consisted of a stretched, scribbled word with a line through it.

Training the Language Model

In 2007, CAPTCHA was upgraded to reCAPTCHA. The test now consisted of two words. The first was a control word with a known answer, used as in the old test to distinguish humans from bots. The second was used to make the Artificial Intelligence (AI) system behind reCAPTCHA smarter: it was taken from a scanned book or article, and its correct reading was not yet known. If a person got the first word right, it was very likely that their answer for the second word was also right, especially if other people gave the same answer. Once enough answers agreed, reCAPTCHA’s language model had learned a new word.
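The two-word check described above can be sketched in a few lines of Python. This is only an illustration of the idea, not reCAPTCHA’s actual implementation: the function names, the `min_votes` threshold of 3 and the case-insensitive comparison are all assumptions made for the example.

```python
from collections import Counter


def record_answer(votes, control_truth, control_answer, unknown_answer):
    """Count a vote for the unknown word only if the control word was typed correctly.

    Hypothetical helper: returns True if the user passed the control word.
    """
    passed = control_answer.strip().lower() == control_truth.strip().lower()
    if passed:
        # The user proved to be human, so their reading of the unknown word counts.
        votes[unknown_answer.strip().lower()] += 1
    return passed


def accepted_transcription(votes, min_votes=3):
    """Return the most common reading once enough users agree, otherwise None.

    min_votes is an assumed threshold, chosen only for illustration.
    """
    if not votes:
        return None
    word, count = votes.most_common(1)[0]
    return word if count >= min_votes else None


# Example: three users pass the control word and agree on the unknown word.
votes = Counter()
record_answer(votes, "morning", "Morning", "upon")
record_answer(votes, "morning", "mourning", "spam")  # fails the control word, vote ignored
record_answer(votes, "morning", "morning", "upon")
record_answer(votes, "morning", "morning", "Upon")
print(accepted_transcription(votes))
```

The key design choice is that an answer for the unknown word only counts when the same person got the known word right, so bots that fail the control word cannot pollute the votes.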

After Google bought reCAPTCHA in 2009, its learning capabilities increased rapidly. Google used reCAPTCHA to help digitize millions of scanned books and articles and to train a machine learning model on the results. This produced a huge image library of distorted words and characters. And the more people used the test, the smarter the model became. It got so smart that at one point reCAPTCHA’s AI could recognize distorted words in images it had never seen before.

When AI Gets Too Smart

As reCAPTCHA’s language model improved, reCAPTCHA tests were made more difficult so people could still prove they were not robots. But at some point, machines became better than humans at recognizing distorted words. In 2014, humans could guess the correct words with about 33% accuracy, while AI knew the answer with 99.8% accuracy. That was when reCAPTCHA V2 was developed.

With reCAPTCHA V2, you have to check a box and do an image challenge. Why? Because humans are better at recognizing objects in different areas, angles and weather conditions than machines. Once again, people were able to prove they were not bots.

As before, the test was used for machine learning. This time, the AI was taught to recognize real-world objects such as traffic lights, crosswalks and street signs. Most of the objects are traffic-related because Google uses this information to train their self-driving cars to recognize them. It is also used to digitize street names and numbers on Google Maps.

Behavior Testing

The AI of reCAPTCHA outsmarted us again as it got better at recognizing objects. That’s why Google developed NoCAPTCHA (an improved V2 version) and reCAPTCHA V3. These new versions verify people based on their behavior. How do you move your mouse before you click? How fast do you type? Do you have a browser history? By constantly tracking your behavior on the Web, the test can figure out whether you are a human or a robot.

In NoCAPTCHA, you still have to do a challenge if the system is not sure you are a human. In reCAPTCHA V3, no interaction is required at all. It simply returns a score between 0.0 (very likely a bot) and 1.0 (very likely a human). If this score is too low, a website owner can, for example, require an additional authentication factor. It is a blind Turing test that constantly monitors your behavior in the background.
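As a rough sketch of what a website owner might do with that score, the Python function below maps an already parsed verification response to an action. The `success` and `score` fields follow the response format of Google’s documented verification endpoint, but the function name, the action labels and the threshold of 0.5 are assumptions for this example. The network call to the verification endpoint is deliberately left out.

```python
def decide_action(verify_response, threshold=0.5):
    """Map a parsed reCAPTCHA V3 verification response to an action.

    verify_response: dict parsed from the verification JSON.
    threshold: assumed cut-off; each site would tune this for its own traffic.
    """
    if not verify_response.get("success", False):
        # The token was missing, expired or invalid.
        return "reject"
    score = float(verify_response.get("score", 0.0))
    if score >= threshold:
        return "allow"
    # Low score: do not block outright, ask for extra proof instead.
    return "require_second_factor"


print(decide_action({"success": True, "score": 0.9}))
print(decide_action({"success": True, "score": 0.2}))
print(decide_action({"success": False}))
```

Asking for a second factor instead of blocking outright keeps a real person with an unusually low score from being locked out.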

Final Thoughts

It is a good thing that we can ban malicious bots that try to make purchases, leave comments or create fake accounts. However, it’s a scary thought that AI can so quickly outsmart us by recognizing text and objects in images. What if it can also outsmart us with our (website) behavior? Moreover, tracking our behavior raises questions about privacy. Can our information just be used to improve AI? And also: how will our information be used? Is it just for profit, like selling self-driving cars? Or is it used for the common good, like reducing traffic accidents? It’s not black and white, but we need to keep an eye on it.

Want to Learn More?

Want to read, watch or listen to more about this topic? Then check out the links below.