I'm not a robot

How reCAPTCHA works

Are you a robot? You have probably answered this question a thousand times, for example when filling in a form. Sometimes it comes with an annoying challenge, and it feels silly when you get the answer wrong. How do these reCAPTCHA tests work, and are they really only there to check that you're not a robot?

How do you tell a human from a bot?

Let's start with the definition. CAPTCHA stands for Completely Automated Public Turing test to tell Computers and Humans Apart. A 'Turing test' is an experiment proposed by Alan Turing in 1950 to test whether a machine exhibits human intelligence. The machine passes if you cannot tell whether you are dealing with a human or a computer. A CAPTCHA is actually the reverse of a Turing test: here a computer does the judging, and its goal is to confirm that a visitor is a human and not a bot.

CAPTCHA was invented by Luis von Ahn. In 2000 he attended a talk at Yahoo!, where he heard that Yahoo needed a way to tell people and bots apart, because many spam bots were trying to register email accounts.

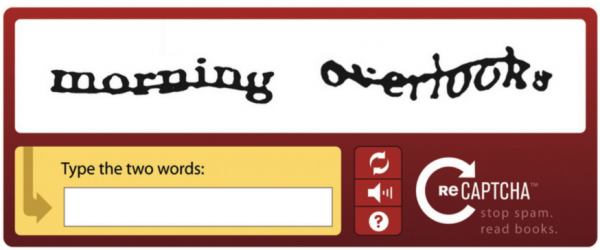

Von Ahn took up this challenge. In his research he found that people are quite good at reading text on all kinds of surfaces: we read road signs and handwriting without any trouble, for example. That is why the first CAPTCHA test consisted of a stretched, squiggly word with a line through it.

Training the language model

In 2005, CAPTCHA was upgraded to reCAPTCHA. The test now consisted of two words. The first word worked like the old test and served to tell humans from bots. The second word was used to make the artificial intelligence (AI) system behind reCAPTCHA smarter: it was taken from a scanned book or article, and the computer could not read it on its own. If a person typed the first word correctly, it was very likely that they had typed the second word correctly as well, especially when other people gave the same answer. When that happened, reCAPTCHA's language model had learned a new word.
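The two-word scheme can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not reCAPTCHA's actual implementation: the function names `grade_attempt` and `accepted_transcription`, the case-insensitive comparison, and the rule that three agreeing answers are enough to accept a reading (`MIN_AGREEING_ANSWERS`) are all hypothetical. The key idea from the text is that only people who got the known control word right are trusted as "votes" for the unknown word.

```python
from collections import Counter

# Hypothetical threshold: how many agreeing, trusted answers we require
# before accepting a reading of the unknown word. Not reCAPTCHA's real value.
MIN_AGREEING_ANSWERS = 3


def grade_attempt(control_answer, control_truth, unknown_answer, votes):
    """Grade one two-word challenge and record a vote for the unknown word.

    Returns True if the user typed the known control word correctly
    (i.e. is treated as human). Only then is their reading of the
    unknown word added to `votes`.
    """
    passed = control_answer.strip().lower() == control_truth.strip().lower()
    if passed:
        votes[unknown_answer.strip().lower()] += 1
    return passed


def accepted_transcription(votes, min_votes=MIN_AGREEING_ANSWERS):
    """Return the most common reading once enough users agree, else None."""
    if not votes:
        return None
    word, count = votes.most_common(1)[0]
    return word if count >= min_votes else None


# Example: several users see the control word "upon" and an unknown word.
votes = Counter()
grade_attempt("upon", "upon", "morning", votes)
grade_attempt("upon", "upon", "morning", votes)
grade_attempt("uqon", "upon", "mourning", votes)  # control wrong: vote ignored
print(accepted_transcription(votes))  # None (only 2 agreeing votes so far)
grade_attempt("Upon", "upon", "Morning", votes)
print(accepted_transcription(votes))  # morning
```

Using the control word as a trust filter is what lets a system like this learn from untrusted visitors: a bot that fails the known word cannot pollute the votes for the unknown one.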

After Google acquired reCAPTCHA in 2009, its learning capacity grew rapidly. Google used reCAPTCHA to digitize millions of scanned books and articles and then to train a machine learning model. It built an enormous image library of distorted words and characters, and the more people took the test, the smarter the model became. Eventually reCAPTCHA's AI became so good that it could recognize distorted words in new images.

When AI gets too smart

As reCAPTCHA's language model improved, the tests were made harder so that people could still prove they weren't robots. At some point, however, machines became better than humans at recognizing distorted words. In 2014, humans guessed the correct words with an accuracy of about 33%, while AI got the answer right 99.8% of the time. That was the moment reCAPTCHA V2 was developed.

With reCAPTCHA V2 you tick a checkbox and complete an image challenge. Why? Because humans are better than machines at recognizing objects in different settings, from different angles and in different weather conditions. Once again, people could prove they weren't bots.

As before, the test was also used for machine learning. This time the AI was taught to recognize real-world objects such as traffic lights, pedestrian crossings and street signs. Most of the objects are traffic-related because Google uses this information to train its self-driving cars to recognize them. It is also used to digitize street names and house numbers for Google Maps.

Testing behaviour

Once reCAPTCHA's AI got better at recognizing objects, it outsmarted us again. That is why Google developed NoCAPTCHA (an improved version of V2) and reCAPTCHA V3. These newer versions verify people based on their behaviour. How do you move your mouse before you click? How fast do you type? Do you have a browsing history? By continuously tracking your behaviour on the web, the test can work out whether you are a human or a robot.

In NoCAPTCHA you still have to complete a challenge if the system isn't sure that you are human. In reCAPTCHA V3 no interaction is needed at all. The website simply receives a score that indicates how likely it is that you are a human rather than a bot. If the score is too low, the website owner can, for example, ask for an additional authentication factor. It is a blind Turing test that constantly monitors your behaviour in the background.
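On the website's side, handling a V3 score might look roughly like the Python sketch below. The `siteverify` URL, the `secret`/`response` request parameters and the `success`, `score` and `action` fields follow Google's reCAPTCHA documentation; the 0.5 threshold is the starting value Google suggests, but each site is expected to tune it. The function names `verify_token` and `decide`, the `"login"` action name and the three outcomes (`"allow"`, `"step_up"` for asking an extra authentication factor, `"reject"`) are hypothetical choices for this example, not part of any official API.

```python
import json
import urllib.parse
import urllib.request

SITEVERIFY_URL = "https://www.google.com/recaptcha/api/siteverify"
# Assumption: 0.5 as a starting threshold; real sites tune this per action.
SCORE_THRESHOLD = 0.5


def verify_token(secret: str, token: str) -> dict:
    """Send the browser's reCAPTCHA token to Google for checking (network call)."""
    data = urllib.parse.urlencode({"secret": secret, "response": token}).encode()
    with urllib.request.urlopen(SITEVERIFY_URL, data=data, timeout=10) as resp:
        return json.load(resp)


def decide(result: dict, expected_action: str, threshold: float = SCORE_THRESHOLD) -> str:
    """Turn a siteverify result into a hypothetical site policy.

    'reject'  - token invalid or issued for a different action
    'allow'   - score high enough: probably a human
    'step_up' - score too low: ask for an extra authentication factor
    """
    if not result.get("success") or result.get("action") != expected_action:
        return "reject"
    score = result.get("score", 0.0)
    return "allow" if score >= threshold else "step_up"


# Example with a canned response, so no network access is needed:
sample = {"success": True, "score": 0.3, "action": "login", "hostname": "example.com"}
print(decide(sample, expected_action="login"))  # step_up
```

Keeping the network call separate from the decision logic makes the policy easy to test and to adjust: a site could, for instance, use a stricter threshold for payments than for comments.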

Conclusion

It's a good thing that we can block malicious bots that try to make purchases, post comments or create fake accounts. Still, it's a scary thought that AI has overtaken us so quickly at recognizing text and objects in images. What if it also learns to imitate our behaviour on websites? On top of that, tracking our behaviour raises privacy questions. Can our data simply be used to improve AI? And how exactly is our data used? Is it purely for profit, such as selling self-driving cars? Or is it used for the common good, such as reducing traffic accidents? It isn't black and white, but it is something we should keep an eye on.

Want to know more?

Want to read, watch or listen to more about this topic? Check out the links below.